Recently Filip Krikava made a fork on GitHub and created a Juno branch using the latest commit +

The master branch of https://github.com/stackforge/nova-docker.git targets the latest Nova (Kilo release); the forked branch is supposed to work with Juno, reasonably including commits after "Merge oslo.i18n". The post below tests the Juno branch of https://github.com/fikovnik/nova-docker.git

Quote ([2]):

The Docker driver is a hypervisor driver for Openstack Nova Compute. It was introduced with the Havana release, but lives out-of-tree for Icehouse and Juno. Being out-of-tree has allowed the driver to reach maturity and feature-parity faster than would be possible should it have remained in-tree. It is expected the driver will return to mainline Nova in the Kilo release.

Install required packages to install nova-docker driver per https://wiki.openstack.org/wiki/Docker

*****************************************************

As of 11/12/2014, the third command of the setup below (git clone) may instead use the official stable/juno branch:

https://github.com/stackforge/nova-docker/tree/stable/juno

# git clone https://github.com/stackforge/nova-docker

*****************************************************

***************************

Initial docker setup

***************************

# yum install docker-io -y

# yum install -y python-pip git

# git clone https://github.com/fikovnik/nova-docker.git

# cd nova-docker

# git branch -v -a

* master 1ed1820 A note no firewall drivers.

remotes/origin/HEAD -> origin/master

remotes/origin/juno 1a08ea5 Fix the problem when an image is not located in the local docker image registry.

remotes/origin/master 1ed1820 A note no firewall drivers.

# git checkout -b juno origin/juno

# python setup.py install

# systemctl start docker

# systemctl enable docker

# chmod 660 /var/run/docker.sock

# pip install pbr

# mkdir /etc/nova/rootwrap.d

******************************

Update nova.conf

******************************

vi /etc/nova/nova.conf

set "compute_driver = novadocker.virt.docker.DockerDriver"
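For a scripted install, the same edit can be made without opening an editor. The following is a minimal sketch, assuming GNU sed and that compute_driver belongs in the [DEFAULT] section of nova.conf; the function name set_compute_driver is hypothetical and not part of nova or nova-docker.

```shell
# Hypothetical helper (not part of nova): point compute_driver at the
# nova-docker driver in the nova.conf given as $1. Assumes GNU sed (-i, -E).
set_compute_driver() {
    conf="$1"
    driver="novadocker.virt.docker.DockerDriver"
    if grep -Eq '^[[:space:]]*compute_driver[[:space:]]*=' "$conf"; then
        # An active (uncommented) setting exists: rewrite it in place.
        sed -i -E "s|^[[:space:]]*compute_driver[[:space:]]*=.*|compute_driver = ${driver}|" "$conf"
    else
        # No active setting: add one right after the [DEFAULT] header.
        sed -i "/^\[DEFAULT\]/a compute_driver = ${driver}" "$conf"
    fi
}
```

Usage, as root: set_compute_driver /etc/nova/nova.conf. If the file has no [DEFAULT] header and no active compute_driver line, the sketch leaves it unchanged, so it is worth checking the result with grep compute_driver /etc/nova/nova.conf.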

************************************************

Next, create the docker.filters file:

************************************************

vi /etc/nova/rootwrap.d/docker.filters

Insert the following lines:

# nova-rootwrap command filters for setting up network in the docker driver

# This file should be owned by (and only-writeable by) the root user

[Filters]

# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'

ln: CommandFilter, /bin/ln, root
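The filter file shown above can also be written non-interactively. This is a sketch only; write_docker_filters is a hypothetical helper name, and the optional path argument exists so the function can be tried against a scratch directory first.

```shell
# Hypothetical helper: write the rootwrap filter file shown above to the
# path given as $1 (default: /etc/nova/rootwrap.d/docker.filters).
write_docker_filters() {
    target="${1:-/etc/nova/rootwrap.d/docker.filters}"
    # Create the rootwrap.d directory if it does not exist yet.
    mkdir -p "$(dirname "$target")" || return 1
    cat > "$target" <<'EOF'
# nova-rootwrap command filters for setting up network in the docker driver
# This file should be owned by (and only-writeable by) the root user

[Filters]
# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'
ln: CommandFilter, /bin/ln, root
EOF
    # Writable by the owner only; run as root so that owner is root.
    chmod 644 "$target"
}
```

Run as root with no argument to write the real file, or pass a path under /tmp to inspect the output before touching /etc/nova.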

*****************************************

Add line to /etc/glance/glance-api.conf

*****************************************

container_formats=ami,ari,aki,bare,ovf,ova,docker

:wq
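The same change can be scripted instead of made in vi. A minimal sketch, assuming GNU sed and that container_formats is read from the [DEFAULT] section of glance-api.conf; add_docker_container_format is a hypothetical helper name.

```shell
# Hypothetical helper: make sure "docker" is listed in container_formats
# in the glance-api.conf given as $1. Assumes GNU sed (-i, -E).
add_docker_container_format() {
    conf="$1"
    if grep -Eq '^[[:space:]]*container_formats[[:space:]]*=.*docker' "$conf"; then
        # Already present: nothing to do, so the function is safe to re-run.
        return 0
    elif grep -Eq '^[[:space:]]*container_formats[[:space:]]*=' "$conf"; then
        # An active list exists without docker: append it to that line.
        sed -i -E '/^[[:space:]]*container_formats[[:space:]]*=/ s/[[:space:]]*$/,docker/' "$conf"
    else
        # No active list: add the line used in this post after [DEFAULT].
        sed -i '/^\[DEFAULT\]/a container_formats=ami,ari,aki,bare,ovf,ova,docker' "$conf"
    fi
}
```

Usage, as root: add_docker_container_format /etc/glance/glance-api.conf, followed by the glance-api restart.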

************************

Restart Services

************************

usermod -aG docker nova

systemctl restart openstack-nova-compute

systemctl status openstack-nova-compute

systemctl restart openstack-glance-api

******************************

Verifying the docker install

******************************

[root@juno ~]# docker run -i -t fedora /bin/bash

Unable to find image 'fedora' locally

fedora:latest: The image you are pulling has been verified

00a0c78eeb6d: Pull complete

2f6ab0c1646e: Pull complete

511136ea3c5a: Already exists

Status: Downloaded newer image for fedora:latest

bash-4.3# cat /etc/issue

Fedora release 21 (Twenty One)

Kernel \r on an \m (\l)

[root@juno ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

738e54f9efd4 fedora:latest "/bin/bash" 3 minutes ago Exited (127) 25 seconds ago stoic_lumiere

14fd0cbba76d ubuntu:latest "/bin/bash" 3 minutes ago Exited (0) 3 minutes ago prickly_hypatia

ef1a726d1cd4 fedora:latest "/bin/bash" 5 minutes ago Exited (0) 3 minutes ago drunk_shockley

0a2da90a269f ubuntu:latest "/bin/bash" 11 hours ago Exited (0) 11 hours ago thirsty_kowalevski

5a3288ce0e8e ubuntu:latest "/bin/bash" 11 hours ago Exited (0) 11 hours ago happy_leakey

21e84951eabd tutum/wordpress:latest "/run.sh" 16 hours ago Up About an hour nova-bf5f7eb9-900d-48bf-a230-275d65813b0f

*******************

Setup Wordpress

*******************

# docker pull tutum/wordpress

# . keystonerc_admin

# docker save tutum/wordpress | glance image-create --is-public=True --container-format=docker --disk-format=raw --name tutum/wordpress

[root@juno ~(keystone_admin)]# glance image-list

+--------------------------------------+-----------------+-------------+------------------+-----------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+--------------------------------------+-----------------+-------------+------------------+-----------+--------+

| c6d01e60-56c2-443f-bf87-15a0372bc2d9 | cirros | qcow2 | bare | 13200896 | active |

| 9d59e7ad-35b4-4c3f-9103-68f85916f36e | tutum/wordpress | raw | docker | 517639680 | active |

+--------------------------------------+-----------------+-------------+------------------+-----------+--------+

********************

Start container

********************

$ . keystonerc_demo

[root@juno ~(keystone_demo)]# neutron net-list

+--------------------------------------+--------------+-------------------------------------------------------+

| id | name | subnets |

+--------------------------------------+--------------+-------------------------------------------------------+

| ccfc4bb1-696d-4381-91d7-28ce7c9cb009 | private | 6c0a34ab-e3f1-458c-b24a-96f5a2149878 10.0.0.0/24 |

| 32c14896-8d47-4a56-b3c6-0dd823f03089 | public | b1799aef-3f69-429c-9881-f81c74d83060 192.169.142.0/24 |

| a65bff8f-e397-491b-aa97-955864bec2f9 | demo_private | 69012862-f72e-4cd2-a4fc-4106d431cf2f 70.0.0.0/24 |

+--------------------------------------+--------------+-------------------------------------------------------+

$ nova boot --image "tutum/wordpress" --flavor m1.tiny --key-name osxkey --nic net-id=a65bff8f-e397-491b-aa97-955864bec2f9 WordPress

[root@juno ~(keystone_demo)]# nova list

+--------------------------------------+-----------+---------+------------+-------------+-----------------------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-----------+---------+------------+-------------+-----------------------------------------+

| bf5f7eb9-900d-48bf-a230-275d65813b0f | WordPress | ACTIVE | - | Running | demo_private=70.0.0.16, 192.169.142.153 |

+--------------------------------------+-----------+---------+------------+-------------+-----------------------------------------+

[root@juno ~(keystone_demo)]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

21e84951eabd tutum/wordpress:latest "/run.sh" About an hour ago Up 11 minutes nova-bf5f7eb9-900d-48bf-a230-275d65813b0f
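The net-id passed to nova boot above was copied by hand from the neutron net-list table. As a sketch, the lookup can be done by network name instead; net_id_by_name is a hypothetical helper, and it assumes the three-column table layout (id, name, subnets) shown in the output above.

```shell
# Hypothetical helper: print the id of the network whose name is $1,
# parsed from the "| id | name | subnets |" table of neutron net-list.
net_id_by_name() {
    neutron net-list | awk -F'|' -v name="$1" '
        # Trim the padding around the name column before comparing.
        { gsub(/^[ \t]+|[ \t]+$/, "", $3) }
        # On a match, strip the padding from the id column and print it.
        $3 == name { gsub(/[ \t]/, "", $2); print $2 }'
}
```

With demo credentials sourced, the boot command could then read: nova boot --image "tutum/wordpress" --flavor m1.tiny --key-name osxkey --nic net-id=$(net_id_by_name demo_private) WordPress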

**************************

Starting Wordpress

**************************

Immediately after the VM starts (on the non-default libvirt subnet 192.169.142.0/24), the status of WordPress is SHUTOFF, so we start WordPress (browser launched to the Juno VM 192.169.142.45 from the KVM hypervisor server):

Browser launched to WordPress container 192.169.142.153 from KVM Hypervisor Server

*******************************************************************************************

Another sample demonstrating nova-docker container functionality: browser launched to the WordPress nova-docker container (192.169.142.155) from the KVM hypervisor server hosting libvirt's subnet (192.169.142.0/24)

*******************************************************************************************

*****************

MySQL Setup

*****************

# docker pull tutum/mysql

# . keystonerc_admin

*****************************

Creating Glance Image

*****************************

# docker save tutum/mysql:latest | glance image-create --is-public=True --container-format=docker --disk-format=raw --name tutum/mysql:latest
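The pull, save, and upload sequence is the same for every image in this post, so it can be wrapped in one function. A minimal sketch: upload_docker_image is a hypothetical name, the glance flags are the ones used above, and admin credentials are assumed to be sourced already.

```shell
# Hypothetical helper: pull the docker image named in $1 and register it
# in Glance under the same name, using the flags shown in this post.
# Assumes ". keystonerc_admin" has already been run in this shell.
upload_docker_image() {
    image="$1"
    # Stop early if the image cannot be pulled.
    docker pull "$image" || return 1
    # Stream the saved image straight into Glance; no temporary file.
    docker save "$image" | glance image-create --is-public=True \
        --container-format=docker --disk-format=raw --name "$image"
}
```

Usage: upload_docker_image tutum/mysql:latest. The return status is that of glance image-create, so a failure in docker save alone would not be reported by this sketch.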

****************************************

Starting Nova-Docker container

****************************************

# . keystonerc_demo

# nova boot --image "tutum/mysql:latest" --flavor m1.tiny --key-name osxkey --nic net-id=5fcd01ac-bc8e-450d-be67-f0c274edd041 mysql

[root@ip-192-169-142-45 ~(keystone_demo)]# nova list

+--------------------------------------+---------------+--------+------------+-------------+-----------------------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+---------------+--------+------------+-------------+-----------------------------------------+

| 3dbf981f-f28c-4abe-8fd1-09b8b8cad930 | WordPress | ACTIVE | - | Running | demo_network=70.0.0.16, 192.169.142.153 |

| 39eef361-1329-44d9-b05a-f6b4b8693aa3 | mysql | ACTIVE | - | Running | demo_network=70.0.0.19, 192.169.142.155 |

| 626bd8e0-cf1a-4891-aafc-620c464e8a94 | tutum/hipache | ACTIVE | - | Running | demo_network=70.0.0.18, 192.169.142.154 |

+--------------------------------------+---------------+--------+------------+-------------+-----------------------------------------+

[root@ip-192-169-142-45 ~(keystone_demo)]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3da1e94892aa tutum/mysql:latest "/run.sh" 25 seconds ago Up 23 seconds nova-39eef361-1329-44d9-b05a-f6b4b8693aa3

77538873a273 tutum/hipache:latest "/run.sh" 30 minutes ago condescending_leakey

844c75ca5a0e tutum/hipache:latest "/run.sh" 31 minutes ago condescending_turing

f477605840d0 tutum/hipache:latest "/run.sh" 42 minutes ago Up 31 minutes nova-626bd8e0-cf1a-4891-aafc-620c464e8a94

3e2fe064d822 rastasheep/ubuntu-sshd:14.04 "/usr/sbin/sshd -D" About an hour ago Exited (0) About an hour ago test_sshd

8e79f9d8e357 fedora:latest "/bin/bash" About an hour ago Exited (0) About an hour ago evil_colden

9531ab33db8d ubuntu:latest "/bin/bash" About an hour ago Exited (0) About an hour ago angry_bardeen

df6f3c9007a7 tutum/wordpress:latest "/run.sh" 2 hours ago Up About an hour nova-3dbf981f-f28c-4abe-8fd1-09b8b8cad930

[root@ip-192-169-142-45 ~(keystone_demo)]# docker logs 3da1e94892aa

=> An empty or uninitialized MySQL volume is detected in /var/lib/mysql

=> Installing MySQL ...

=> Done!

=> Creating admin user ...

=> Waiting for confirmation of MySQL service startup, trying 0/13 ...

=> Creating MySQL user admin with random password

=> Done!

========================================================================

You can now connect to this MySQL Server using:

mysql -uadmin -pfXs5UarEYaow -h

Please remember to change the above password as soon as possible!

MySQL user 'root' has no password but only allows local connections

========================================================================

141218 20:45:31 mysqld_safe Can't log to error log and syslog at the same time.

Remove all --log-error configuration options for --syslog to take effect.

141218 20:45:31 mysqld_safe Logging to '/var/log/mysql/error.log'.

141218 20:45:31 mysqld_safe Starting mysqld daemon with databases from /var/lib/mysql

[root@ip-192-169-142-45 ~(keystone_demo)]# mysql -uadmin -pfXs5UarEYaow -h 192.169.142.155 -P 3306

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.5.40-0ubuntu0.14.04.1 (Ubuntu)

Copyright (c) 2000, 2014, Oracle, Monty Program Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases ;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

+--------------------+

3 rows in set (0.01 sec)

MySQL [(none)]>
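The admin password above was read out of the docker logs output by eye. As a sketch, it can be extracted with sed instead; mysql_admin_password is a hypothetical helper, and it relies on the "mysql -uadmin -p..." hint line that this image prints in the log shown above.

```shell
# Hypothetical helper: print the generated admin password found in the
# logs of the container id or name given as $1. Depends on the
# "mysql -uadmin -p<password>" line shown in the log output above.
mysql_admin_password() {
    docker logs "$1" 2>&1 |
        sed -n 's/.*mysql -uadmin -p\([^[:space:]]*\).*/\1/p' |
        head -n 1
}
```

Usage: mysql -uadmin -p"$(mysql_admin_password 3da1e94892aa)" -h 192.169.142.155 -P 3306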

*******************************************

Setup Ubuntu 14.04 with SSH access

*******************************************

# docker pull rastasheep/ubuntu-sshd:14.04

# . keystonerc_admin

# docker save rastasheep/ubuntu-sshd:14.04 | glance image-create --is-public=True --container-format=docker --disk-format=raw --name rastasheep/ubuntu-sshd:14.04

# . keystonerc_demo

# nova boot --image "rastasheep/ubuntu-sshd:14.04" --flavor m1.tiny --key-name osxkey --nic net-id=5fcd01ac-bc8e-450d-be67-f0c274edd041 ubuntuTrusty

***********************************************************

Log in to the dashboard and assign a floating IP:

***********************************************************

[root@ip-192-169-142-45 ~(keystone_demo)]# nova list

+--------------------------------------+--------------+---------+------------+-------------+-----------------------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+--------------+---------+------------+-------------+-----------------------------------------+

| 3dbf981f-f28c-4abe-8fd1-09b8b8cad930 | WordPress | SHUTOFF | - | Shutdown | demo_network=70.0.0.16, 192.169.142.153 |

| 7bbf887f-167c-461e-9ee0-dd4d43605c9e | lamp | ACTIVE | - | Running | demo_network=70.0.0.20, 192.169.142.156 |

| 39eef361-1329-44d9-b05a-f6b4b8693aa3 | mysql | SHUTOFF | - | Shutdown | demo_network=70.0.0.19, 192.169.142.155 |

| f21dc265-958e-4ed0-9251-31c4bbab35f4 | ubuntuTrusty | ACTIVE | - | Running | demo_network=70.0.0.21, 192.169.142.157 |

+--------------------------------------+--------------+---------+------------+-------------+-----------------------------------------+

[root@ip-192-169-142-45 ~(keystone_demo)]# ssh root@192.169.142.157

root@192.169.142.157's password:

Last login: Fri Dec 19 09:19:40 2014 from ip-192-169-142-45.ip.secureserver.net

root@instance-0000000d:~# cat /etc/issue

Ubuntu 14.04.1 LTS \n \l

root@instance-0000000d:~# ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

nse49711e9-93 Link encap:Ethernet HWaddr fa:16:3e:32:5e:d8

inet addr:70.0.0.21 Bcast:70.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fe32:5ed8/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:2574 errors:0 dropped:0 overruns:0 frame:0

TX packets:1653 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2257920 (2.2 MB) TX bytes:255582 (255.5 KB)

root@instance-0000000d:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/docker-253:1-4600578-76893e146987bf4b58b42ff6ed80892df938ffba108f22c7a4591b18990e0438 9.8G 302M 9.0G 4% /

tmpfs 1.9G 0 1.9G 0% /dev

shm 64M 0 64M 0% /dev/shm

/dev/mapper/centos-root 36G 9.8G 26G 28% /etc/hosts

tmpfs 1.9G 0 1.9G 0% /run/secrets

tmpfs 1.9G 0 1.9G 0% /proc/kcore

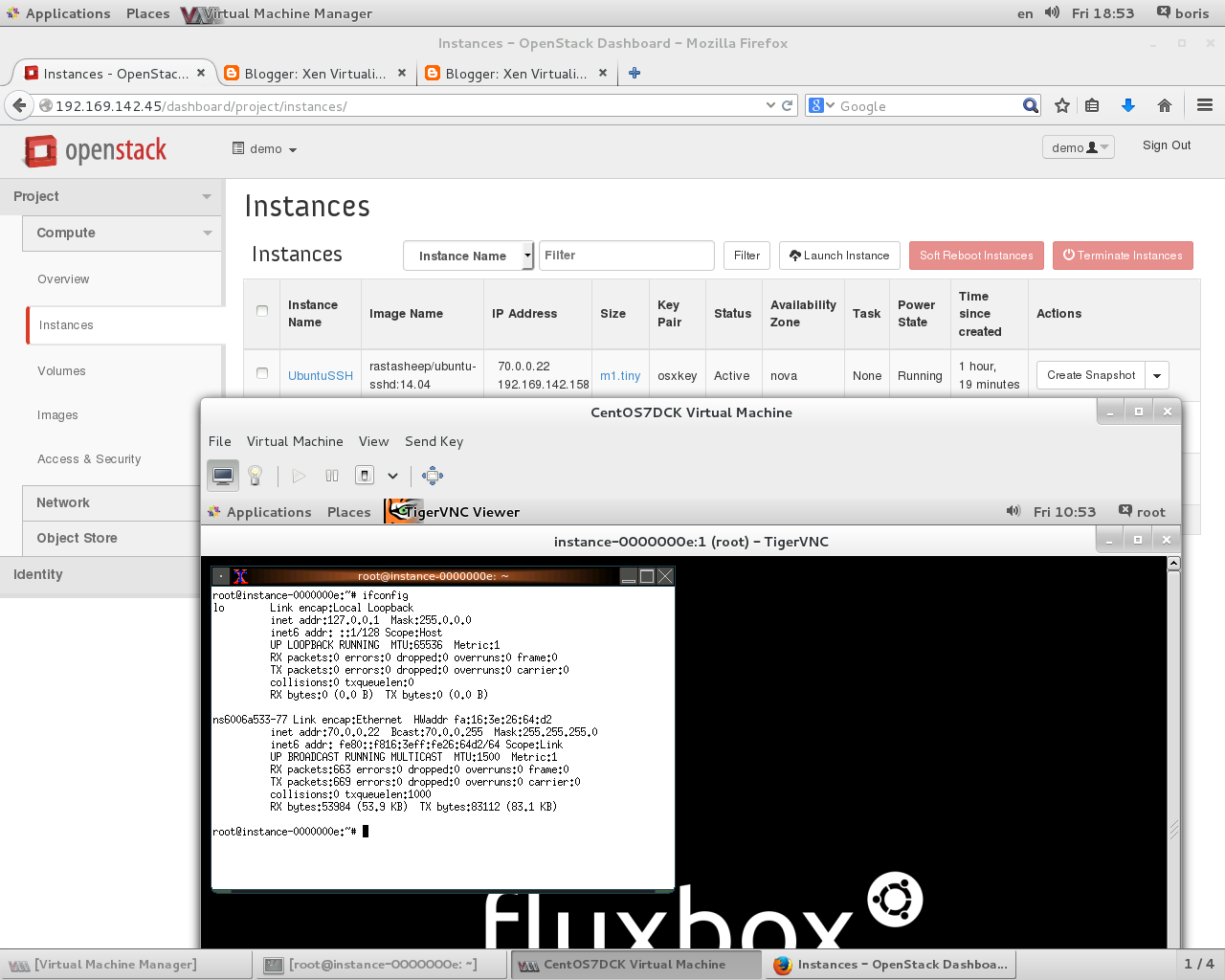

************************************************************

Set up VNC with Ubuntu SSH nova-docker container

************************************************************

root@instance-0000000e:~# cat /etc/issue

Ubuntu 14.04.1 LTS \n \l

root@instance-0000000e:~# apt-get install xorg fluxbox vnc4server -y

root@instance-0000000e:~# echo "exec fluxbox">> ~/.xinitrc

root@instance-0000000e:~# ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

ns6006a533-77 Link encap:Ethernet HWaddr fa:16:3e:26:64:d2

inet addr:70.0.0.22 Bcast:70.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::f816:3eff:fe26:64d2/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4284 errors:0 dropped:0 overruns:0 frame:0

TX packets:5771 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:706698 (706.6 KB) TX bytes:2866607 (2.8 MB)

root@instance-0000000e:~# ps -ef | grep vnc

root 33 1 0 15:48 pts/0 00:00:02 Xvnc4 :1 -desktop instance-0000000e:1 (root) -auth /root/.Xauthority -geometry 1024x768 -depth 16 -rfbwait 30000 -rfbauth /root/.vnc/passwd -rfbport 5901 -pn -fp /usr/X11R6/lib/X11/fonts/Type1/,/usr/X11R6/lib/X11/fonts/Speedo/,/usr/X11R6/lib/X11/fonts/misc/,/usr/X11R6/lib/X11/fonts/75dpi/,/usr/X11R6/lib/X11/fonts/100dpi/,/usr/share/fonts/X11/misc/,/usr/share/fonts/X11/Type1/,/usr/share/fonts/X11/75dpi/,/usr/share/fonts/X11/100dpi/ -co /etc/X11/rgb

root 39 1 0 15:48 pts/0 00:00:00 vncconfig -iconic

root 148 9 0 15:59 pts/0 00:00:00 grep --color=auto vnc

*************************************************

Testing Tomcat in Nova-Docker Container

**************************************************

[root@junodocker ~]# docker pull tutum/tomcat

Pulling repository tutum/tomcat

02e84f04100e: Download complete

511136ea3c5a: Download complete

1c9383292a8f: Download complete

9942dd43ff21: Download complete

d92c3c92fa73: Download complete

0ea0d582fd90: Download complete

cc58e55aa5a5: Download complete

c4ff7513909d: Download complete

3e9890d3403b: Download complete

b9192a10c580: Download complete

28e5e6a80860: Download complete

2128079f9b8f: Download complete

1a9cd5ad5ba2: Download complete

a4432dd90a00: Download complete

9e76a879f59b: Download complete

1cd5071591ec: Download complete

abc71ecb910e: Download complete

4f2b619579e3: Download complete

7907e64b4ca0: Download complete

80446f8c6fc0: Download complete

747d7f2e49a2: Download complete

c6e054e6696d: Download complete

Status: Downloaded newer image for tutum/tomcat:latest

[root@junodocker ~]# . keystonerc_admin

[root@junodocker ~(keystone_admin)]# docker save tutum/tomcat:latest | glance image-create --is-public=True --container-format=docker --disk-format=raw --name tutum/tomcat:latest

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | a96192a982d17a1b63d81ff28eda07fe |

| container_format | docker |

| created_at | 2014-12-25T13:20:44 |

| deleted | False |

| deleted_at | None |

| disk_format | raw |

| id | a18ef105-74af-4b05-a5e7-76425de7b9fb |

| is_public | True |

| min_disk | 0 |

| min_ram | 0 |

| name | tutum/tomcat:latest |

| owner | 304f8ad83c414cb79c2af21d2c89880d |

| protected | False |

| size | 552029184 |

| status | active |

| updated_at | 2014-12-25T13:21:29 |

| virtual_size | None |

+------------------+--------------------------------------+

***************************

Deployed via dashboard

***************************

[root@junodocker ~(keystone_admin)]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bc6b30f07717 tutum/tomcat:latest "/run.sh" 2 minutes ago Up 2 minutes nova-bc5ed111-aa9e-44e7-a5ba-d650d46bfcd7

3527cc82e982 eugeneware/docker-wordpress-nginx:latest "/bin/bash /start.sh 2 days ago Up 2 hours nova-d55a0876-acf5-4af7-9240-4e44810ebb21

009d1cadcab5 rastasheep/ubuntu-sshd:14.04 "/usr/sbin/sshd -D" 2 days ago Up About an hour nova-716e0421-8e56-4b19-a447-b39e5aedbc6b

b23f9d238a2a rastasheep/ubuntu-sshd:14.04 "/usr/sbin/sshd -D" 5 days ago Exited (0) 2 days ago nova-ae40aae3-c148-4def-8a4b-0adf69e38f8d

[root@junodocker ~(keystone_admin)]# docker logs bc6b30f07717

=> Creating and admin user with a random password in Tomcat

=> Done!

===============================================

You can now configure to this Tomcat server using:

admin:8VMttCo2lE5O

===============================================

Another sample to test: kumarpraveen/fedora-sshd

See https://registry.hub.docker.com/u/kumarpraveen/fedora-sshd/

References