UPDATE 04/03/2016

In the meantime, use the repositories for RC1 rather than the Delorean trunks.

END UPDATE

DVR && Nova-Docker Driver (stable/mitaka) tested fine on RDO Mitaka (build 20160329), with none of the issues described in the previous post for RDO Liberty.

So, create a DVR deployment with Controller/Network + N(*)Compute Nodes. Switch to the Docker Hypervisor on each Compute Node and make the required updates to glance and the filters file on the Controller. You are all set. The FIP(s) of Nova-Docker instances are reachable from outside via the Neutron Distributed Router (DNAT) using the "fg" interface ( fip-namespace ) residing on the same host as the Docker Hypervisor. South-North traffic does not depend on VXLAN tunneling on DVR systems.

Why does DVR come into play ?

This recalls a similar problem with the Nova-Docker Driver (Kilo), where I hit the same kind of issue (VXLAN connection Controller <==> Compute) on F22 (OVS 2.4.0), while the same driver worked fine on CentOS 7.1 (OVS 2.3.1). My guess is that the Nova-Docker driver has a problem with OVS 2.4.0, no matter which branch (stable/kilo, stable/liberty, stable/mitaka) is checked out for the driver build.

Note that the issue is specific to the ML2&OVS&VXLAN setup; an RDO Mitaka ML2&OVS&VLAN deployment works with Nova-Docker (stable/mitaka) with no problems.

I have not run ovs-ofctl dump-flows on the br-tun bridges, etc., because even with the malfunction proven I could not file it in BZ. The Nova-Docker Driver is not packaged for RDO, so it is upstream stuff, and upstream won't consider an issue that involves building the driver from source on RDO Mitaka (RC1).

Thus, as a quick and efficient workaround, I suggest a DVR deployment setup, to kill two birds with one stone. It results in South-North traffic being forwarded directly from the host running the Docker Hypervisor to the Internet and vice versa, thanks to basic "fg" functionality ( the outgoing interface of the fip-namespace, residing on a Compute node whose L3 agent runs in "dvr" agent_mode ).

**************************

Procedure in detail

**************************

First, install the repositories for RDO Mitaka (the most recent build that passed CI):-

# yum -y install yum-plugin-priorities

# cd /etc/yum.repos.d

# curl -O https://trunk.rdoproject.org/centos7-mitaka/delorean-deps.repo

# curl -O https://trunk.rdoproject.org/centos7-mitaka/current-passed-ci/delorean.repo

# yum -y install openstack-packstack (Controller only)

Now proceed as follows :-

1. Here is the answer file to deploy the pre-DVR cluster

2. Convert the cluster to DVR as advised in "RDO Liberty DVR Neutron workflow on CentOS 7.2"

http://dbaxps.blogspot.com/2015/10/rdo-liberty-rc-dvr-deployment.html

Just one note: on RDO Mitaka, run the following on each compute node

# ovs-vsctl add-br br-ex

# ovs-vsctl add-port br-ex eth0

Then configure

***********************************************************

On Controller (X=2) and Computes X=(3,4) update :-

***********************************************************

# cat ifcfg-br-ex

DEVICE="br-ex"

BOOTPROTO="static"

IPADDR="192.169.142.1(X)7"

NETMASK="255.255.255.0"

DNS1="83.221.202.254"

BROADCAST="192.169.142.255"

GATEWAY="192.169.142.1"

NM_CONTROLLED="no"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="yes"

IPV6INIT=no

ONBOOT="yes"

TYPE="OVSIntPort"

OVS_BRIDGE=br-ex

DEVICETYPE="ovs"

# cat ifcfg-eth0

DEVICE="eth0"

ONBOOT="yes"

TYPE="OVSPort"

DEVICETYPE="ovs"

OVS_BRIDGE=br-ex

NM_CONTROLLED=no

IPV6INIT=no
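The IPADDR line in ifcfg-br-ex uses X as a per-node placeholder. As a minimal sketch, assuming the numbering given in the heading above (X=2 for the Controller, X=3 and X=4 for the Computes), a hypothetical helper br_ex_ipaddr (not part of the original procedure) expands the placeholder into the concrete address for each node:

```shell
# Hypothetical helper: expands the "192.169.142.1(X)7" placeholder
# from ifcfg-br-ex for a given node index X.
br_ex_ipaddr() {
    x="$1"
    # Accept only a single digit, as in the X=2,3,4 scheme used here.
    case "$x" in
        [0-9]) printf '192.169.142.1%s7\n' "$x" ;;
        *) echo "usage: br_ex_ipaddr <digit>" >&2; return 1 ;;
    esac
}

# Print the IPADDR line for the Controller and both Computes.
for x in 2 3 4; do
    printf 'IPADDR="%s"\n' "$(br_ex_ipaddr "$x")"
done
```

With X=3 this gives 192.169.142.137, which matches the Compute host name seen in the shell prompts later in this post.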

***************************

Then run script

***************************

#!/bin/bash -x

chkconfig network on

systemctl stop NetworkManager

systemctl disable NetworkManager

service network restart

Reboot node.

**********************************************

Nova-Docker Setup on each Compute

**********************************************

# curl -sSL https://get.docker.com/ | sh

# usermod -aG docker nova ( does not seem to help with 660 set on docker.sock )

# systemctl start docker

# systemctl enable docker

# chmod 666 /var/run/docker.sock (add to /etc/rc.d/rc.local)

# easy_install pip

# git clone -b stable/mitaka https://github.com/openstack/nova-docker

*******************

Driver build

*******************

# cd nova-docker

# pip install -r requirements.txt

# python setup.py install

********************************************

Switch nova-compute to DockerDriver

********************************************

vi /etc/nova/nova.conf

compute_driver=novadocker.virt.docker.DockerDriver

******************************************************************

Next on Controller/Network Node and each Compute Node

******************************************************************

mkdir /etc/nova/rootwrap.d

vi /etc/nova/rootwrap.d/docker.filters

[Filters]

# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'

ln: CommandFilter, /bin/ln, root
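The filters file can also be written non-interactively instead of via vi. A minimal sketch, assuming a hypothetical helper write_docker_filters that takes the target directory as its argument, so it can be tried against a scratch directory before touching /etc/nova/rootwrap.d:

```shell
# Hypothetical helper: writes docker.filters into the directory given as $1.
write_docker_filters() {
    dir="$1"
    mkdir -p "$dir" || return 1
    cat > "$dir/docker.filters" <<'EOF'
[Filters]
# nova/virt/docker/driver.py: 'ln', '-sf', '/var/run/netns/.*'
ln: CommandFilter, /bin/ln, root
EOF
}

# Dry run against a scratch directory; on a real node the argument
# would be /etc/nova/rootwrap.d (run as root).
scratch="$(mktemp -d)"
write_docker_filters "$scratch"
cat "$scratch/docker.filters"
```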

**********************************************************

Nova Compute Service restart on Compute Nodes

**********************************************************

# systemctl restart openstack-nova-compute

***********************************************

Glance API Service restart on Controller

**********************************************

vi /etc/glance/glance-api.conf

container_formats=ami,ari,aki,bare,ovf,ova,docker

# systemctl restart openstack-glance-api
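Before uploading images it may be worth confirming that "docker" really is listed in container_formats. A minimal sketch, assuming a hypothetical helper has_docker_format that takes the path of the config file to inspect (shown here against a scratch file rather than the live /etc/glance/glance-api.conf):

```shell
# Hypothetical helper: succeeds if an uncommented container_formats line
# in the given config file lists "docker".
has_docker_format() {
    conf="$1"
    grep -E '^[[:space:]]*container_formats[[:space:]]*=' "$conf" \
        | tr -d ' \t' \
        | cut -d= -f2 \
        | tr ',' '\n' \
        | grep -qx 'docker'
}

# Dry run against a scratch copy of the setting shown above.
sample="$(mktemp)"
echo 'container_formats=ami,ari,aki,bare,ovf,ova,docker' > "$sample"
if has_docker_format "$sample"; then
    echo "docker container format enabled"
else
    echo "docker container format missing"
fi
```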

**************************************************

Network flow on Compute in a bit more detail

**************************************************

When a floating IP gets assigned to a VM, here is what actually happens ( [1] ) :-

The same explanation may be found in ( [4] ), although not in a step-by-step manner; in particular, it contains a detailed description of the reverse network flow and ARP Proxy functionality.

1. The fip- namespace is created on the local compute node (if it does not already exist)

2. A new port rfp- gets created on the qrouter- namespace (if it does not already exist)

3. The rfp port on the qrouter namespace is assigned the associated floating IP address

4. The fpr port on the fip namespace gets created and linked via point-to-point network to the rfp port of the qrouter namespace

5. The fip namespace gateway port fg- is assigned an additional address from the public network range to set up ARP proxy point

6. The fg- is configured as a Proxy ARP
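To check on a Compute node that these namespaces exist, the output of `ip netns list` can be filtered for the fip- and qrouter- prefixes. A minimal sketch, assuming a hypothetical helper dvr_namespaces that reads namespace names on stdin (the sample names in the dry run are made up):

```shell
# Hypothetical helper: keeps only DVR-related namespaces (fip-*, qrouter-*)
# from a list of namespace names read on stdin.
dvr_namespaces() {
    # "ip netns list" may append " (id: N)" to each name; keep the first field.
    awk '$1 ~ /^(fip|qrouter)-/ { print $1 }'
}

# Dry run with made-up names; on a real Compute node use:
#   ip netns list | dvr_namespaces
printf '%s\n' 'fip-EXAMPLE-NET (id: 1)' 'qrouter-EXAMPLE-ROUTER (id: 0)' 'qdhcp-EXAMPLE' \
    | dvr_namespaces
```

Once a namespace name is known, `ip netns exec <namespace> ip addr` shows the rfp-, fpr- and fg- ports described in the steps above.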

*********************

Flow itself ( [1] ):

*********************

1. The VM, initiating transmission, sends a packet via the default gateway, and br-int forwards the traffic to the local DVR gateway port (qr-).

2. DVR routes the packet using the routing table to the rfp- port.

3. A NAT rule is applied to the packet, replacing the VM's source IP with the assigned floating IP; the packet is then sent through the rfp- port, which connects to the fip namespace via the point-to-point network 169.254.31.28/31.

4. The packet is received on the fpr- port in the fip namespace and then routed outside through the fg- port.
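The /31 prefix on the point-to-point network leaves exactly two addresses, one for each end of the rfp-/fpr- link. As a small worked example, a hypothetical helper p2p_pair lists both addresses of a /31 given its base address; which end gets which address is not stated in ( [1] ), so the sketch does not assign them:

```shell
# Hypothetical helper: prints the two addresses of a /31 network,
# given its base address (the one with an even last octet).
p2p_pair() {
    base="$1"
    prefix="${base%.*}"      # first three octets
    last="${base##*.}"       # last octet
    # The base address of a /31 always has an even last octet.
    if [ $((last % 2)) -ne 0 ]; then
        echo "not a /31 base address: $base" >&2
        return 1
    fi
    printf '%s.%s\n' "$prefix" "$last"
    printf '%s.%s\n' "$prefix" "$((last + 1))"
}

p2p_pair 169.254.31.28
```

For 169.254.31.28/31 the two addresses are 169.254.31.28 and 169.254.31.29.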

Two Node deployment snapshots (Controller/Network + Compute)

Three Node deployment snapshots (Controller/Network + 2*Compute)

**************************************************************************************

Build the GlassFish 4.1 docker image on Compute per

http://bderzhavets.blogspot.com/2015/01/hacking-dockers-phusionbaseimage-to.html and upload it to glance :-

**************************************************************************************

[root@ip-192-169-142-137 ~(keystone_admin)]# docker images

REPOSITORY                 TAG      IMAGE ID       CREATED              SIZE
derby/docker-glassfish41   latest   3a6b84ec9206   About a minute ago   1.155 GB
rastasheep/ubuntu-sshd     latest   70e0ac74c691   2 days ago           251.6 MB
phusion/baseimage          latest   772dd063a060   3 months ago         305.1 MB
tutum/tomcat               latest   2edd730bbedd   7 months ago         539.9 MB
larsks/thttpd              latest   a31ab5050b67   15 months ago        1.058 MB

[root@ip-192-169-142-137 ~(keystone_admin)]# docker save derby/docker-glassfish41 | openstack image create derby/docker-glassfish41 --public --container-format docker --disk-format raw

+------------------+------------------------------------------------------+
| Field            | Value                                                |
+------------------+------------------------------------------------------+
| checksum         | 9bea6dd0bcd8d0d7da2d82579c0e658a                     |
| container_format | docker                                               |
| created_at       | 2016-04-01T14:29:20Z                                 |
| disk_format      | raw                                                  |
| file             | /v2/images/acf03d15-b7c5-4364-b00f-603b6a5d9af2/file |
| id               | acf03d15-b7c5-4364-b00f-603b6a5d9af2                 |
| min_disk         | 0                                                    |
| min_ram          | 0                                                    |
| name             | derby/docker-glassfish41                             |
| owner            | 31b24d4b1574424abe53b9a5affc70c8                     |
| protected        | False                                                |
| schema           | /v2/schemas/image                                    |
| size             | 1175020032                                           |
| status           | active                                               |
| tags             |                                                      |
| updated_at       | 2016-04-01T14:30:13Z                                 |
| virtual_size     | None                                                 |
| visibility       | public                                               |
+------------------+------------------------------------------------------+

[root@ip-192-169-142-137 ~(keystone_admin)]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8f551d35f2d7 derby/docker-glassfish41 "/sbin/my_init" 39 seconds ago Up 31 seconds nova-faba725e-e031-4edb-bf2c-41c6dfc188c1

dee4425261e8 tutum/tomcat "/run.sh" About an hour ago Up About an hour nova-13450558-12d7-414c-bcd2-d746495d7a57

41d2ebc54d75 rastasheep/ubuntu-sshd "/usr/sbin/sshd -D" 2 hours ago Up About an hour nova-04ddea42-10a3-4a08-9f00-df60b5890ee9

[root@ip-192-169-142-137 ~(keystone_admin)]# docker logs 8f551d35f2d7

*** Running /etc/my_init.d/00_regen_ssh_host_keys.sh...

No SSH host key available. Generating one...

*** Running /etc/my_init.d/01_sshd_start.sh...

Creating SSH2 RSA key; this may take some time ...

Creating SSH2 DSA key; this may take some time ...

Creating SSH2 ECDSA key; this may take some time ...

Creating SSH2 ED25519 key; this may take some time ...

invoke-rc.d: policy-rc.d denied execution of restart.

SSH KEYS regenerated by Boris just in case !

SSHD started !

*** Running /etc/my_init.d/database.sh...

Derby database started !

*** Running /etc/my_init.d/run.sh...

Bad Network Configuration. DNS can not resolve the hostname:

java.net.UnknownHostException: instance-00000006: instance-00000006: unknown error

Waiting for domain1 to start ......

Successfully started the domain : domain1

domain Location: /opt/glassfish4/glassfish/domains/domain1

Log File: /opt/glassfish4/glassfish/domains/domain1/logs/server.log

Admin Port: 4848

Command start-domain executed successfully.

=> Modifying password of admin to random in Glassfish

spawn asadmin --user admin change-admin-password

Enter the admin password>

Enter the new admin password>

Enter the new admin password again>

Command change-admin-password executed successfully.

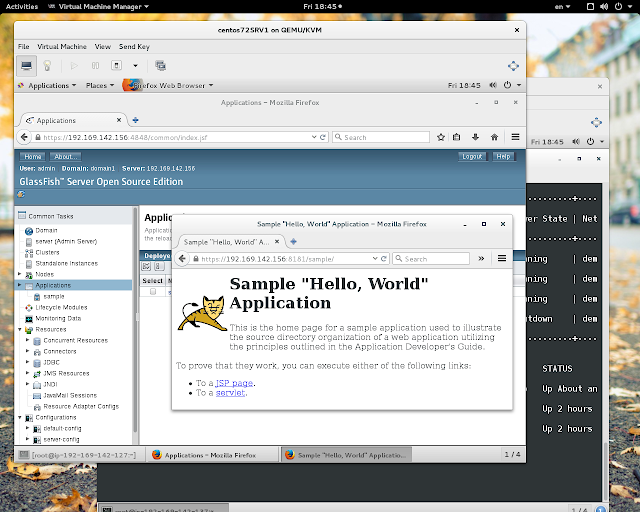

A fairly heavy docker image, built by a "docker expert" like myself ;), gets launched, and the nova-docker instance seems to properly run several daemons at a time ( sshd enabled )

[boris@fedora23wks Downloads]$ ssh root@192.169.142.156

root@192.169.142.156's password:

Last login: Fri Apr 1 15:33:06 2016 from 192.169.142.1

root@instance-00000006:~# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 14:32 ? 00:00:00 /usr/bin/python3 -u /sbin/my_init

root 100 1 0 14:33 ? 00:00:00 /bin/bash /etc/my_init.d/run.sh

root 103 1 0 14:33 ? 00:00:00 /usr/sbin/sshd

root 170 1 0 14:33 ? 00:00:03 /opt/jdk1.8.0_25/bin/java -Djava.library.path=/op

root 427 100 0 14:33 ? 00:00:02 java -jar /opt/glassfish4/bin/../glassfish/lib/cl

root 444 427 2 14:33 ? 00:01:23 /opt/jdk1.8.0_25/bin/java -cp /opt/glassfish4/gla

root 1078 0 0 15:32 ? 00:00:00 bash

root 1110 103 0 15:33 ? 00:00:00 sshd: root@pts/0

root 1112 1110 0 15:33 pts/0 00:00:00 -bash

root 1123 1112 0 15:33 pts/0 00:00:00 ps -ef

Glassfish is running indeed
